From CAE to AI

-A Personal Perspective

Paved Roads

I still remember the sense of wonder and gratitude I felt, as a junior CAE engineer in the early 90s, when I found "paved roads": it was so efficient when someone else provided a working methodology to make my simulations faster and more effective. This search for paved roads continued throughout my CAE career over the following decades, with the introduction of automatic meshing and of affordable HPC, either on-premise or in the Cloud.

Let us start from the roaring 90s: I discovered a road to 3D fluid dynamics simulation, paved for me by general-purpose CFD codes such as PHOENICS, FLOW3D, STAR-CD and FLUENT. That was already a revolution.

But when you work in an industrial environment, you always face increasing pressure and new challenges.

The first challenge was how to simulate realistic geometries. It might sound silly to consider this as a challenge in 2020; however, at the time there was no paved road leading straight from CAD to CFD results.

The "dirt road" I had to take in the early 90s was the following:

- Ask a friendly co-worker who can read some intermediate neutral CAD files.

- Take STL or similar surface format from FEA, e.g., PATRAN, HyperMesh or I-DEAS.

- Use it as a frame to build your volume mesh almost by hand.

No wonder, at the time, the role of CFD was mainly diagnostic! Someone came into your office with a known issue and asked for “an analysis” that would help to make it better the next time. Back then, it was fair to keep the rest of the company waiting a week or even a month for your analysis. After that, they took your report and went somewhere for their product changes or failure analyses.

Let us fast forward a few decades with Doc’s magic DeLorean: "Back to the Present".

Some of the above “CFD codes” of the early Nineties are nowadays at the core of full-cycle solution suites distributed by market giants. CFD / CAE solutions are embedded in all-encompassing digital representations of physical processes or products. As a positive consequence, there are no more dispersed and untimely communications on small pieces of the product. Simulation can be truly upfront, solving issues before they come up. Even better, CAE can contribute to the product concept, or even drive it, when coupled to parametric or topological optimization tools that shape new ideas.

However, it is never enough: the next challenge was Digital Twins. What are the opportunities in the new Digital Twin scenario, emerging since around 2015?

- First, each CAE user has hundreds, thousands, up to hundreds of thousands of simulation results stored somewhere. Cycles of design-space exploration produce thousands of additional simulations every day around the world.

- Second, the Digital Twin means a digital version of reality, with several engineering fidelity levels. However, reality runs in real-time! Any user of accurate CAE knows that fidelity comes at a price: the time needed to get quality results.

So the claim of "having a digital twin" by putting together CAD and high-end CAE (as heard from software vendors) is not 100% satisfied, since the digital twin will have reaction times several orders of magnitude longer than reality. Drastic simplifications are needed, such as Reduced-Order Modelling and Model-Based Systems Engineering. With simplifications, you lose the richness of high-end CAE. Is there a way out?

The Rise of Deep Learning

Let us come back to the “abundance of CAE data” I mentioned before (hundreds to thousands of CAE files generated per user per year). Could this abundance of data be framed within the popular concept of Big Data / Data Science, and how can we extract value from those Big Data? And, coming back to the need for real-time CAE: could one leverage professional-grade CAE data to produce some form of almost real-time response?

I had a premonition that Artificial Intelligence (AI) could help... but what kind of AI? Are 3D geometries and 3D simulations within the grasp of existing AI technologies?

Fortunately, the answer is yes - a new AI has emerged recently: “feature” learning. Feature learning is widely used in image classification and recognition. In this flavour of AI, no one is manually feeding the AI with features: instead, they emerge from raw data.

I will summarize the evolution of AI from “Symbolic” to “Deep Learning” using the example of board games. A well-known example of “good old-fashioned” Symbolic AI is Deep Blue defeating the reigning world chess champion in a match in 1997. Some 20 years later, AlphaGo, blending search trees with deep Artificial Neural Networks (ANNs), defeated a top human Go player, a feat that was considered totally impossible in the 90s.

A less playful but equally striking example of a Deep Learning application is protein folding. Although not related to CAE, it shares a couple of striking similarities with it. First, there is a connection between shape (=geometry) and function (=results). Second, it is hardware-intensive. One may say it is even more intensive than CFD; doing a random search would mean exploring something like 10^143 configurations - the so-called Levinthal paradox. Once you know the DNA sequence that generates a protein, you still do not know the final 3D folded shape. Scientists have been looking for faster ways to predict a protein’s final folded shape and hence what drugs to develop against disorders related to rogue misfolded proteins. Examples are Parkinson’s disease, Alzheimer’s disease and diabetes. CASP (=Critical Assessment of Structure Prediction) is a community-wide experiment to determine and advance the state-of-the-art in modelling protein structure from amino acid sequences. As an outstanding application of Deep Learning, in 2018, DeepMind’s AlphaFold ranked first in the CASP13 competition. The next edition, CASP14, is due later this year.

Deep Learning can nowadays contribute to Advanced Driver-Assistance Systems (ADAS). At short and medium range, it can help to prevent collisions with pedestrians, cyclists and other vehicles (cars, trucks). Of course, you never want to collide with anything; however, detecting the object type helps evaluate its specific intentions. This technology is of great help in the transition to future autonomous driving. In ADAS, Deep Learning is a way to extract features that emerge from an initially agnostic state of knowledge. There are no engineers designing algorithms for feature extraction based on their current (limited) experience. To be precise, Deep Learning facilitates image classification (this object is a pedestrian), object detection (where are the pedestrians in the scene?) and even semantic segmentation (understanding a view as a whole).

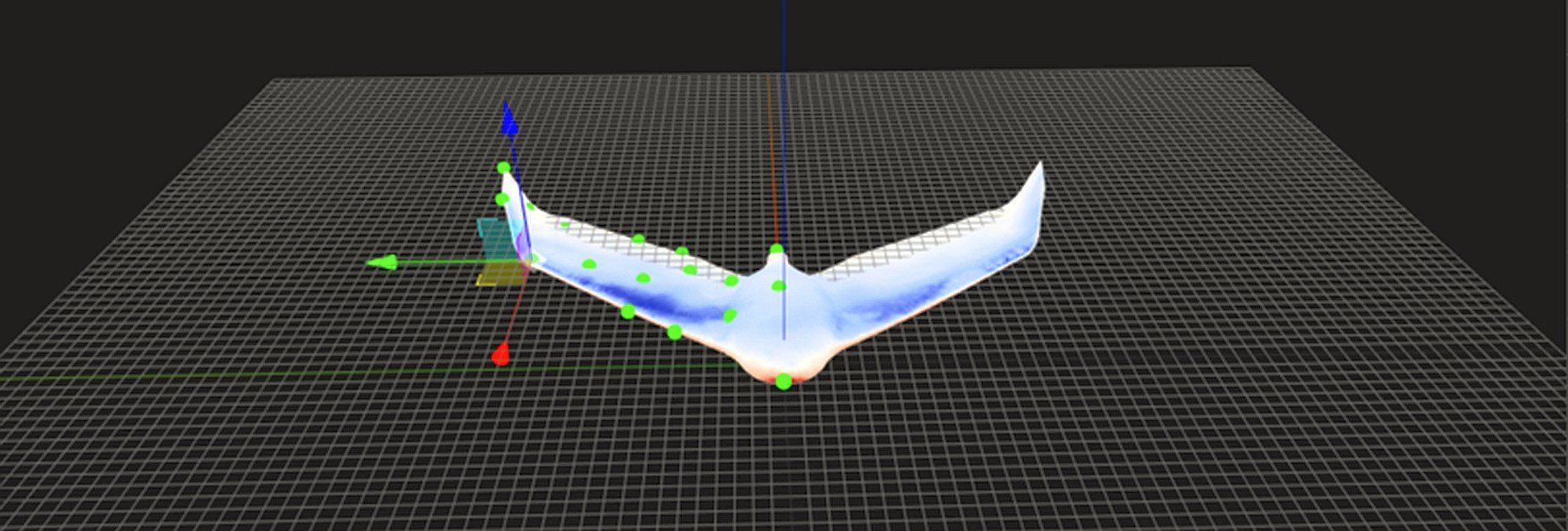

How does image classification work? You feed an initially agnostic ANN (our artificial neural network) with relevant images and labels. At the very beginning, you might show a pedestrian picture, and the ANN may say that it’s 90% a pedestrian and 10% a truck. This ignorance at the so-called “first epoch” of a Deep Learning process should not create any concern at all, because the ANN progressively tunes its internal connections to minimize an error. The error is some metric expressing the distance between the provided labels and the predictions. The figure above is an example of an interior visualization of a geometric neural network.
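The training loop described above can be sketched in a few lines of Python. This is only a minimal illustration under loud assumptions, not how a production image classifier is built: each "image" is reduced to two made-up features (height and width), the network is a single logistic neuron, and the names `make_sample`, `predict` and `mean_error` are hypothetical. The error is a cross-entropy, one possible choice of metric for the distance between labels and predictions.

```python
import math
import random

random.seed(0)

# Hypothetical toy data: each "image" is reduced to two made-up features
# (height, width in metres). Label 1 = pedestrian, label 0 = truck.
def make_sample(label):
    if label == 1:
        return [random.gauss(1.7, 0.1), random.gauss(0.5, 0.1)], 1
    return [random.gauss(3.5, 0.3), random.gauss(2.5, 0.2)], 0

data = [make_sample(i % 2) for i in range(200)]

def predict(weights, bias, x):
    """Probability that x is a pedestrian (output of one logistic neuron)."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def mean_error(weights, bias, samples):
    """Cross-entropy: a distance between provided labels and predictions."""
    eps = 1e-12
    total = 0.0
    for x, y in samples:
        p = predict(weights, bias, x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(samples)

weights, bias = [0.0, 0.0], 0.0   # agnostic start: every prediction is 50/50
learning_rate = 0.1
history = []                      # error recorded at the start of each epoch

for epoch in range(50):
    history.append(mean_error(weights, bias, data))
    for x, y in data:
        # Gradient of the cross-entropy with respect to z is (p - y).
        grad = predict(weights, bias, x) - y
        weights = [w - learning_rate * grad * xi for w, xi in zip(weights, x)]
        bias -= learning_rate * grad

history.append(mean_error(weights, bias, data))
print(f"error at first epoch: {history[0]:.3f}, after training: {history[-1]:.3f}")
```

The point of the sketch is the shape of the process: the model starts with no knowledge, and each pass over the data nudges the connections in the direction that reduces the error.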

To recap: the above process was the training phase for the ANN. Smartly, during training, you did not reveal all of your labelled images to the ANN. Now it’s time for the ANN to test its knowledge on the images and labels that were held back. After completing training and testing, the ANN is production-ready for predictions with certain levels of confidence (inference). What is interesting is that Transfer Learning, a relevant new topic in Deep Learning, helps our ANN apply what it has learned on one task to another. For instance, you can transfer knowledge of cars to knowledge of trucks.
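The train/test split can be illustrated with a small, self-contained sketch. Everything here is an assumption made for illustration: the dataset is synthetic, the 80/20 split ratio is arbitrary, and a nearest-centroid classifier (the hypothetical `fit_centroids` and `classify`) stands in for the ANN so that the example stays short. What matters is that the held-out samples play no part in training.

```python
import random

random.seed(1)

# Hypothetical labelled dataset: (feature vector, label) pairs.
def make_sample(label):
    centre = (1.7, 0.5) if label == "pedestrian" else (3.5, 2.5)
    return [random.gauss(c, 0.3) for c in centre], label

dataset = [make_sample(random.choice(["pedestrian", "truck"])) for _ in range(300)]

# Hold out 20% of the labelled samples; the model never sees them in training.
random.shuffle(dataset)
split = int(0.8 * len(dataset))
train_set, test_set = dataset[:split], dataset[split:]

def fit_centroids(samples):
    """Stand-in for training: average the features of each class."""
    sums, counts = {}, {}
    for x, label in samples:
        acc = sums.setdefault(label, [0.0] * len(x))
        for i, xi in enumerate(x):
            acc[i] += xi
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}

def classify(centroids, x):
    """Inference: return the label of the closest class centroid."""
    def sq_dist(c):
        return sum((a - b) ** 2 for a, b in zip(x, c))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))

centroids = fit_centroids(train_set)   # training uses train_set only
correct = sum(classify(centroids, x) == label for x, label in test_set)
test_accuracy = correct / len(test_set)
print(f"accuracy on {len(test_set)} held-out samples: {test_accuracy:.2%}")
```

The accuracy measured on the held-out samples is the honest estimate of how the model will behave on data it has never seen, which is what counts in production.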

After I understood the above, my enthusiasm reached the stars. “Wow, so I could feed an ANN with images (CAD) and labels (CAE results). Train it, test it, and get a productive AI. AI fed with new geometries will predict results”. OK, but… here we meet TANSTAAFL!

TANSTAAFL: “There ain’t no such thing as a free lunch”. To construct a trained ANN (a surrogate model), you need, as the word says, to train it on a specific problem. It requires a dataset (say, 50 to 1000 simulations) and some GPU time (overnight or within 24 hours on a cluster). And another point: you can be ambitious, but not at the cost of improper generalizations. If I wanted to “predict in general” starting from “any geometry”, I would need practically infinite resources in terms of datasets, memory and GPU training time.

Paved Road of the Twenties?

In analogy to the times (during my stint as a CFD newbie) when I was looking for a “paved road” in CFD, I recently wondered if I could find a “paved road” with Deep Learning in CAE. I was looking for a solution that would allow me to extract geometries and attached CAE results, train an ANN and get a surrogate model. Predictions by the surrogate model could be virtually instantaneous. This would bring enormous advantages: AI embedded in loops of design space exploration, optimization, Fluid-Structure Interaction, and even almost real-time diagnostics and connection to smart devices. All this, based on quality results (high-fidelity simulations) used during the training phase!
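The surrogate workflow (pay for the simulations once, then predict cheaply) can be sketched as follows. This is a toy under stated assumptions and not the method of any particular product: `expensive_simulation` is a made-up slow function with no physical meaning, the design has a single hypothetical parameter `radius`, and a plain interpolation table stands in for the trained ANN. The surrogate deliberately refuses to extrapolate outside the sampled range, in the spirit of avoiding improper generalizations.

```python
import bisect
import math

def expensive_simulation(radius):
    """Hypothetical stand-in for a CAE run: a slow numerical integration.

    It integrates sin(radius * t)^2 over t in [0, 1] with many small steps,
    only to mimic a costly solver; it models no real physics.
    """
    steps = 5_000
    dt = 1.0 / steps
    return sum(math.sin(radius * (i + 0.5) * dt) ** 2 for i in range(steps)) * dt

# "Training" dataset: 50 simulations sampled across the design range [1, 3].
design_points = [1.0 + 2.0 * i / 49 for i in range(50)]
results = [expensive_simulation(r) for r in design_points]

def surrogate(radius):
    """Cheap prediction: linear interpolation between stored simulations."""
    if not design_points[0] <= radius <= design_points[-1]:
        raise ValueError("outside the sampled range: refusing to extrapolate")
    hi = bisect.bisect_left(design_points, radius)
    hi = min(max(hi, 1), len(design_points) - 1)
    lo = hi - 1
    weight = (radius - design_points[lo]) / (design_points[hi] - design_points[lo])
    return (1 - weight) * results[lo] + weight * results[hi]

new_radius = 2.234   # a design that was not in the dataset
error = abs(surrogate(new_radius) - expensive_simulation(new_radius))
print(f"surrogate error on an unseen design: {error:.2e}")
```

A real geometry has far more than one parameter, which is exactly where a simple table stops scaling and a learned model becomes attractive; the division of labour between the costly offline phase and the cheap online prediction stays the same.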

At this point, in my search for my new Paved Road, I came across Neural Concept.

What happened next?

Well, that’s another story!

Bibliography

[1] H.K. Versteeg, W. Malalasekera. (1995). “An Introduction to Computational Fluid Dynamics. The Finite Volume Method”. Longman Scientific and Technical, ISBN 0-470-23515-2 [1995 Edition, a description of general-purpose CFD in the early Nineties]

[2] “On Deep Learning and Multi-Objective Shape Optimization”, Neural Concept Post

[3] Pierre Baqué, Edoardo Remelli, Francois Fleuret, Pascal Fua. (2018). “Geodesic Convolutional Shape Optimization”. Proceedings of the 35th International Conference on Machine Learning, PMLR 80:472-481.

[4] Protein Structure Prediction Centre (http://www.predictioncenter.org/)

[5] A. Senior, J. Jumper, D. Hassabis, P. Kohli. (2020). “AlphaFold: Using AI for scientific discovery” (https://deepmind.com/blog/article/AlphaFold-Using-AI-for-scientific-discovery).

[6] Hironobu Fujiyoshi, Tsubasa Hirakawa, Takayoshi Yamashita. (2019). “Deep learning-based image recognition for autonomous driving”. IATSS Research, 43. doi:10.1016/j.iatssr.2019.11.008.

[7] Robert A. Heinlein. (1966). “The Moon is a Harsh Mistress” [on TANSTAAFL in my loose interpretation]